TableTalk

Awarded Best User Experience Design at the ITEE Innovation Showcase 2017

TableTalk at the UQ Interaction Expo 2017

TableTalk is a project developed by the group ‘First Responders’ in the class DECO3850. It is a tool to enhance brainstorming and divergent conversations. We observed that groups trying to gather ideas have difficulty expanding on their inspirations while brainstorming. Previous research shows that adding stimuli during a brainstorming session improves the results, so we set out to create a tool that does just that, without the interruptions that usually come with using conventional computers to assist brainstorming.

First Responders: Dineshkran Rajasingam, Hilal Mudhafar Al-Riyami, Jordan Hankins, Muhammad Raihan Saputra

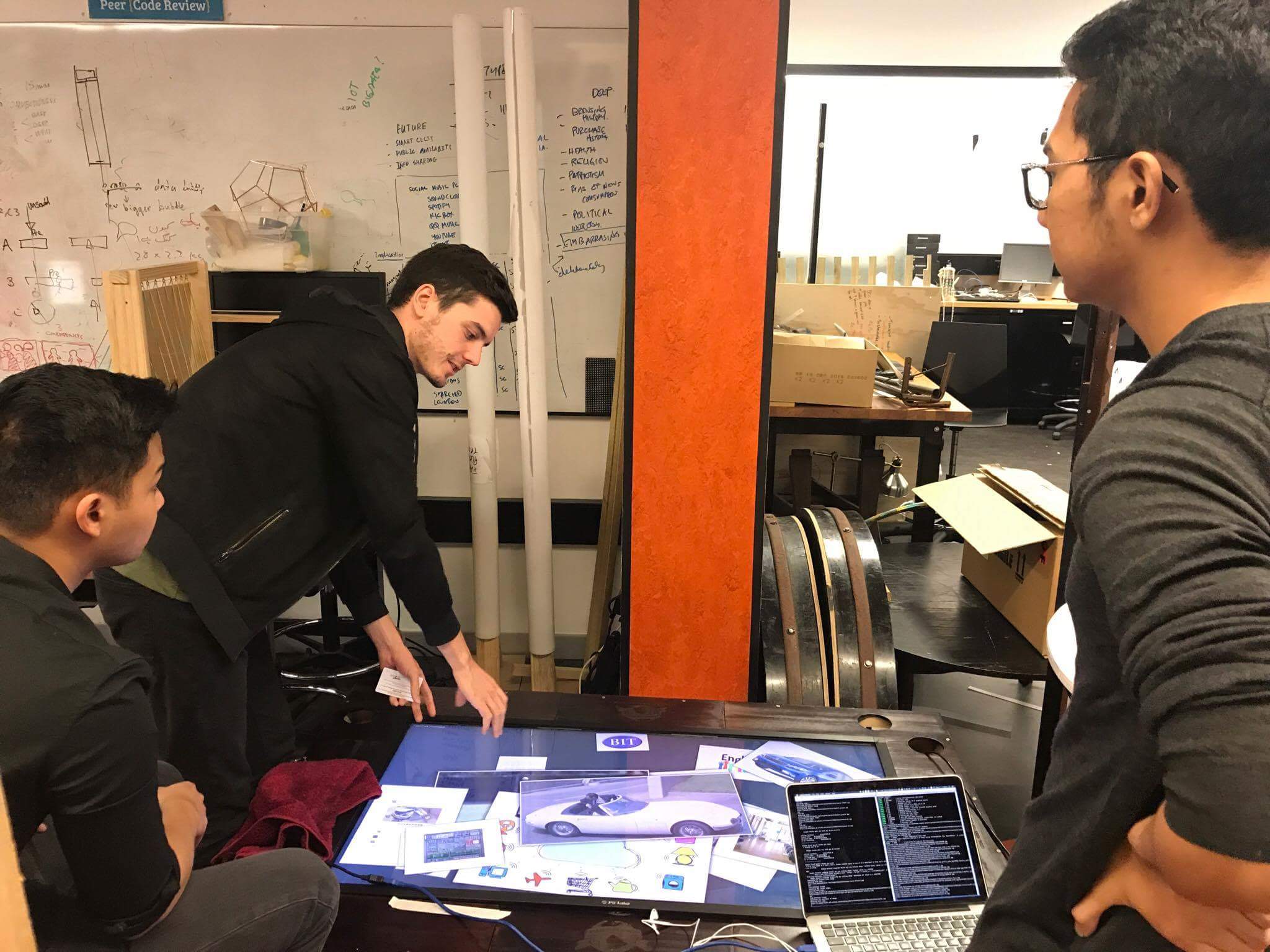

TableTalk is a table with a touchscreen display that responds to your group's conversation by showing relevant pictures. While you are discussing, you can interact with the pictures, grouping them together or dismissing images that do not interest you. The table listens continuously while you talk, so you can carry on the discussion just as you would without TableTalk.

Related projects: Conversation Table, Reflect, Talk Table

Initial Concept

We were inspired by group conversations, so our process began by exploring the dynamics of conversation. At first we wanted to measure the contribution of each participant in a group, but we got stuck on how to measure contribution itself: the total time a participant spends talking is not the same as their contribution to the discussion. Measuring participation in conversations has also been done before by Reflect (Bachour et al., 2008), Conversation Table (Cherubini, 2006) and Talk Table (Yunho and Karam, 2009). So instead of measuring the dynamics of the conversation, we set out to improve the conversation itself.

Development Process

After reviewing the literature on brainstorming and observing our own group discussions, we realised that brainstorming with additional stimuli could help considerably in solving problems.

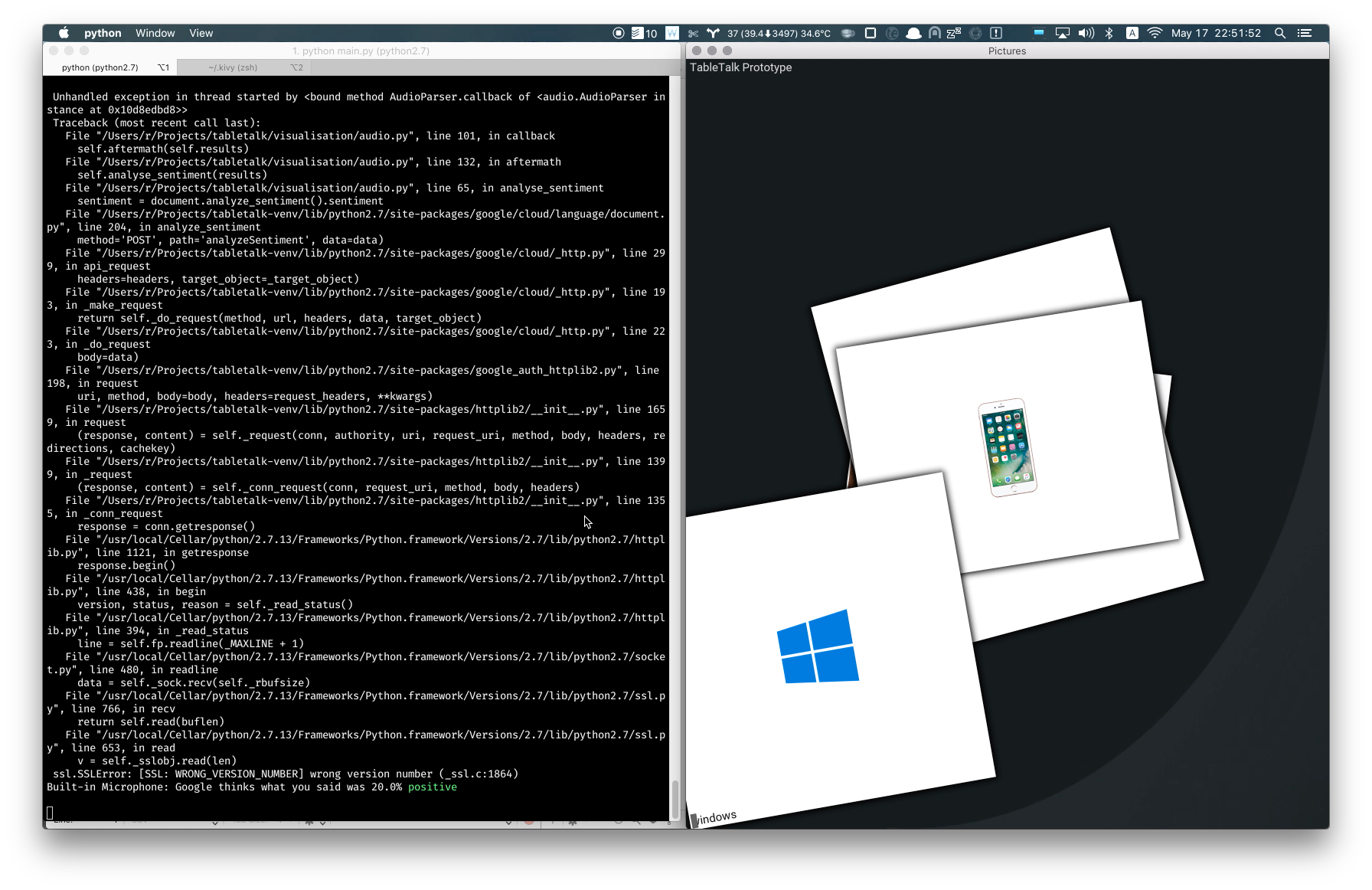

At first we were unsure what to use and how it would work. We considered speech recognition technologies but doubted that they would create a good experience. What mattered most to us was preserving the normal, dynamic flow of group conversation and keeping TableTalk invisible to the conversation until it is needed, and our earlier experience with assistants such as Siri and Google Assistant did not suggest that speech recognition could do this. After trying it out, however, we found that continuous speech recognition worked better than expected. Listening to the conversation continuously yielded the best results: it shortens the response time, so users do not have to pause mid-sentence and wait for the system to respond.
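One way to picture the continuous-listening approach is a producer-consumer loop: a listener thread keeps recognising speech and pushes each transcript onto a queue, while the main loop handles transcripts as they arrive, so processing never forces the listener (or the speakers) to pause. The sketch below is a minimal illustration under stated assumptions, not TableTalk's actual code: `recognise_forever` and `run_pipeline` are hypothetical names, and the recogniser is simulated by a list of already-transcribed phrases. In a real system, a library such as SpeechRecognition, whose `Recognizer.listen_in_background` invokes a callback from a background thread, could supply the audio side.

```python
import queue
import threading


def recognise_forever(phrases, transcripts, stop):
    """Hypothetical stand-in for a continuous speech recogniser.

    Each item in `phrases` plays the role of one recognised utterance;
    a real system would capture and transcribe microphone audio instead.
    """
    for phrase in phrases:
        if stop.is_set():
            break
        transcripts.put(phrase)  # hand off immediately, keep "listening"
    transcripts.put(None)  # sentinel: no more speech


def run_pipeline(phrases, handle):
    """Handle each transcript as soon as it arrives, in arrival order."""
    transcripts = queue.Queue()  # unbounded, so the listener never blocks on put
    stop = threading.Event()
    listener = threading.Thread(
        target=recognise_forever, args=(phrases, transcripts, stop), daemon=True
    )
    listener.start()
    while True:
        text = transcripts.get()  # waits only until the next phrase is ready
        if text is None:
            break
        handle(text)
    stop.set()
    listener.join()


if __name__ == "__main__":
    seen = []
    run_pipeline(["what about a rooftop garden", "maybe add solar panels"], seen.append)
    print(seen)  # ['what about a rooftop garden', 'maybe add solar panels']
```

Because the listener only ever appends to the queue, a slow `handle` step (for example a network search) delays the display of results but not the capture of the next phrase.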


My role in this project was developer, alongside Jordan. I took on development because I have experience with Python, and Jordan has experience with audio manipulation. Together we worked on both the backend and the frontend. The backend handled speech recognition, natural language processing, and search API requests; the frontend was built with the Kivy UI library. I focused on making TableTalk reliable rather than on adding features, because in our own use, a non-responsive (or only partly responsive) TableTalk made for a poor experience.
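The natural-language step in the backend, turning a stretch of transcript into something a search API can use, might look roughly like the sketch below. It is a hypothetical illustration rather than the code TableTalk used: the small stopword list, the frequency ranking, and the names `extract_keywords` and `build_query` are all assumptions, and the actual search API call is left out.

```python
import re
from collections import Counter

# Small illustrative stopword list (an assumption; a real system would use a fuller one).
STOPWORDS = {
    "a", "an", "the", "and", "or", "but", "so", "we", "i", "you", "it", "is",
    "are", "was", "be", "to", "of", "in", "on", "for", "with", "that", "this",
    "what", "about", "maybe", "could", "should", "would", "like", "just", "do",
}


def extract_keywords(transcript, top_k=3):
    """Return up to `top_k` candidate search terms from a transcript.

    Words are lower-cased, stopwords and tokens of two letters or fewer are
    dropped, and the rest are ranked by frequency (ties keep first-seen order).
    """
    words = re.findall(r"[a-z']+", transcript.lower())
    content = [w for w in words if w not in STOPWORDS and len(w) > 2]
    return [word for word, _ in Counter(content).most_common(top_k)]


def build_query(transcript):
    """Hypothetical glue step: join the keywords into one image-search query."""
    return " ".join(extract_keywords(transcript))


if __name__ == "__main__":
    text = "What about a rooftop garden? A garden with solar panels."
    print(extract_keywords(text))  # ['garden', 'rooftop', 'solar']
    print(build_query(text))       # garden rooftop solar
```

Ranking by raw frequency is the simplest possible choice: it favours words repeated during a discussion, which are plausibly its topic, but a part-of-speech tagger or a dedicated keyword-extraction library would likely pick better terms.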

TableTalk at the UQ Interaction Expo 2017

We did not conduct any formal user testing before the exhibition, so the exhibition was the first time we could observe new users interacting with TableTalk. The response was overwhelmingly positive. Users of various ages started interacting with the table without much instruction. They also came up with their own uses for TableTalk, which became a source of inspiration for developing it further.

Based on our initial aim and my own observations at the exhibition, I want to find out more about what makes a great brainstorming tool. My report discusses TableTalk in its current state as a Group Support System (GSS) and potential improvements that would bring it closer to an ideal GSS.

References:

Cherubini, Mauro. “Conversation Table: Visual Representation of Conversational Dynamics.” Mauro Cherubini’s moleskine, 2006. http://www.i-cherubini.it/mauro/blog/2006/02/15/conversation-table-visual-representation-of-conversational-dynamics/ [accessed 30 March 2017].

Bachour, Khaled, Frédéric Kaplan, and Pierre Dillenbourg. “Reflect: An Interactive Table for Regulating Face-to-Face Collaborative Learning.” Times of Convergence. Technologies Across Learning Contexts, Lecture Notes in Computer Science: 39-48. Print.

Yunho, Choi, and P. Karam. “Interactive Talk Table: Interactive Table with Multi-touch and Physical Computing.” YouTube, 2009. https://www.youtube.com/watch?v=wZdelRBYRnc [accessed 30 March 2017].